Export Rockset Data

The guides below have been designed to help with exporting data and metadata from Rockset. If you need assistance with anything, please contact Rockset's customer support team at [email protected] or by creating a support ticket.

For any questions regarding off-boarding or business operations upon the announcement of OpenAI’s acquisition of Rockset, please see the Announcement FAQs.

Export Metadata into JSON files

Follow the steps below to export your Rockset organization’s metadata into JSON files by running the rockset_org_metadata Python script. This script retrieves metadata from a Rockset organization and saves it into JSON files. It supports the following Rockset endpoints:

- Users

- Collections

- Integrations

- Query Lambdas (abbrev.

lambdas) - Aliases

- Views

- Workspaces (abbrev.

ws)

The script also includes options for debugging, verbosity, and limiting the number of collections processed.

All of the steps below are also listed in GitHub here.

Requirements

- Python 3.9 or higher

requestslibrarytqdmlibrary

Step 1: Create a Virtual Environment

Create and activate a Python virtual environment to install dependencies.

python3 -m venv venv

source venv/bin/activate

Step 2: Download the Metadata Script

Download the script rockset_org_metadata.py from GitHub here .

Step 3: Execute the Script

Run the script with the required --apiKey argument. Additional optional arguments are available for debugging, verbosity, and limiting the number of collections processed.

python3 rockset_org_metadata.py --apiKey $ROCKSET_API_KEY [--debug] [--verbose] [--limit COLLECTION_LIMIT]

Arguments

-apiKey: (Required) Your Rockset API key.-debug: (Optional) Enable debug mode for troubleshooting.-verbose: (Optional) Enable verbose mode for displaying exceptions.-limit: (Optional) Limit the number of collections processed.

Directory Structure

The script will create a rockset_org directory and save the JSON files for each endpoint in this directory.

rockset_org/

├── rockset_org_users.json

├── rockset_org_collections.json

├── rockset_org_integrations.json

├── rockset_org_lambdas.json

├── rockset_org_aliases.json

├── rockset_org_views.json

└── rockset_org_ws.json

Sample Outputs

Query Lambdas (`rockset_org_lambdas.json`)

{

"data": [

{

"workspace": "Example_Workspace",

"last_updated_by": "[email protected]",

"last_updated": "2024-06-25T10:00:00Z",

"name": "SampleQueryLambda",

"version_count": 1,

"collections": [

"Example_Workspace.SampleCollection"

],

"latest_version": {

"workspace": "Example_Workspace",

"created_by": "[email protected]",

"created_by_apikey_name": null,

"created_at": "2024-06-25T10:00:00Z",

"name": "SampleQueryLambda",

"version": "123abc456def7890",

"description": "This is a sample query lambda description.",

"sql": {

"query": "SELECT * FROM Example_Workspace.SampleCollection WHERE condition = :condition;",

"default_parameters": [

{

"name": "condition",

"type": "string",

"value": ""

}

]

},

"collections": [

"Example_Workspace.SampleCollection"

],

"state": "ACTIVE",

"stats": {

"last_executed": "2024-06-25T12:00:00Z",

"last_executed_by": "[email protected]",

"last_execution_error": null,

"last_execution_error_message": null

},

"public_access_id": null

}

}

]

}

Views (`rockset_org_views.json`)

{

"data": [

{

"path": "example_workspace.sample_view",

"name": "sample_view",

"description": "This is a sample view.",

"workspace": "example_workspace",

"creator_email": "[email protected]",

"created_by_apikey_name": null,

"owner_email": null,

"query_sql": "SELECT * FROM example_workspace.sample_collection WHERE condition = :condition;",

"entities": [

"example_workspace.sample_collection"

],

"state": "CREATED",

"created_at": "2024-06-25T10:00:00Z",

"modified_at": "2024-06-25T10:00:00Z"

}

]

}

Collections (`rockset_org_collections.json`)

{

"data": [

{

"created_at": "2024-05-13T21:49:31Z",

"created_by": "[email protected]",

"rrn": "rrn:col:usw2a1:f70c0660-ce29-4fdc-bc51-988f638aa885",

"name": "sample_collection",

"workspace": "example_workspace",

"status": "READY",

"sources": [

{

"s3": {

"bucket": "example-bucket",

"region": "us-west-2",

"prefix": "sample_data",

"object_count_total": 100,

"object_bytes_total": 123456789,

"settings": {

"s3_scan_frequency": "PT5M"

}

},

"id": "example-source-id",

"integration_name": "example-integration",

"status": {

"state": "WATCHING",

"last_processed_at": "2024-05-13T21:55:00Z",

"total_processed_items": 100,

"data_downloaded_bytes": 123456789,

"documents_downloaded": 100

}

}

],

"stats": {

"doc_count": 100,

"total_size": 123456789,

"total_index_size": 123456789

},

"field_mappings": [],

"field_mapping_query": {

"sql": "SELECT *\nEXCEPT (_meta)\nFROM _input"

},

"fields": [

{

"name": "timestamp",

"type": "timestamp"

},

{

"name": "id",

"type": "string"

},

{

"name": "name",

"type": "string"

},

{

"name": "value",

"type": "float"

}

]

}

]

}

Example: Debug with Collection Limit

The following example will export your Rockset org metadata with debug and other informative messaging sent to the terminal while iterating only over your first ten collections.

python3 rockset_org_metadata.py --apiKey $ROCKSET_API_KEY --debug --verbose --limit 10

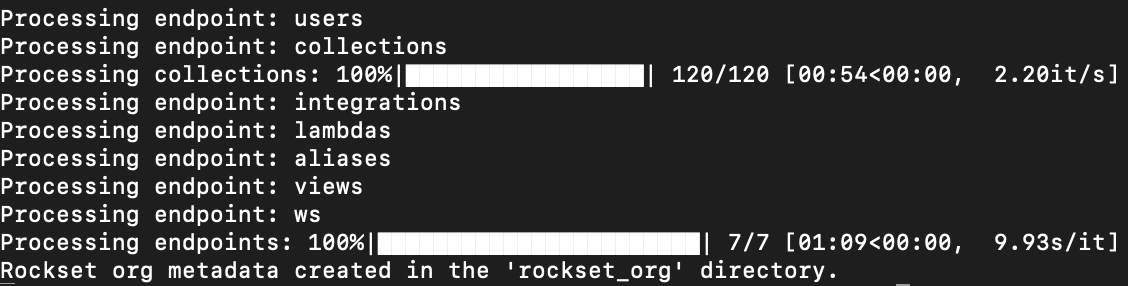

Expected Terminal Output

Step 4: Exit the Virtual Environment

To deactivate the virtual environment, use the following command:

deactivate

Export Collection Data using INSERT INTO s3

You can use our INSERT INTO s3 feature to export collection data from Rockset and write it directly to Amazon S3.

Example

Say you have a collection analytics in workspace commons . An example query using INSERT INTO s3 to export all data from commons.analytics to the s3 path s3://analyticsdata/query1 would look like the following:

INSERT INTO 's3://analyticsdata/query1'

INTEGRATION = 's3export'

SELECT * FROM commons.analytics

See our INSERT INTO s3 documentation for more information, requirements, and examples.

Export Collection Data Using Script-Generated cURL Request

Follow the below steps to export your data from Rockset. These steps involve using a script to create a custom cURL request that utilizes our INSERT INTO s3 feature to export data from Rockset and write directly to Amazon s3.

All of the steps below are also listed in GitHub here .

Requirements

- Python 3.x

- Rockset API Key

- Write access to an S3 bucket and appropriate IAM Policy/Roles configured

- S3 Bucket must be created in the same region as the Rockset collection

Recommendations

- Before exporting data from Rockset, stop all ingestion and processes (e.g. Query Lambdas)

- For most, it may be easiest to create a single S3 bucket for all exported collections

- Different workspaces and collections can be exported to different paths in that bucket

- Use “async” query mode for large amounts of data

- The query must execute within 30 minutes - you can batch your export into multiple smaller exports if you exceed this time limit

- Export to Parquet will generally be faster for larger datasets as a tradeoff to JSON being easier to read

Step 1: Download the Script

Download the script we have already created to assist in exporting collection data, titled export_RSCollection_to_AWSS3.py , available in GitHub here.

Step 2: Execute the Script

You will now execute the script to export your collection data. The following examples create an output file named export_mycollection_script.sh.

AWS IAM Role and Policy with read/write access required

The below examples assume you already have an AWS IAM Role and Policy set up for Rockset with read/write access. If you do not already have these, follow the instructions for configuring a Policy here and for configuring a Role here.

Option 1: Use a Rockset Integration

Recommended option

This example uses a Rockset S3 Integration. You can use an existing valid integration or create a new one in the Integrations tab of the Rockset console by following the setup instructions in our doc here. You will then use this integration name when executing the script.

python3 export_RSCollection_to_AWSS3.py \

--output_file export_mycollection_script.sh \

--param_RS_region usw2a1 \

--param_RS_apikey myRSAPIKey \

--param_RS_wsdotcollectionname prod.mycollection \

--param_RS_outputformat JSON \

--param_AWS_S3bucketuri s3://myS3bucket \

--param_RS_integrationname myRS_S3_IntegrationName

Option 2: Use an AWS IAM Role and AWS External ID

This example uses an AWS IAM Role and AWS External ID instead of a Rockset Integration. You will input these values directly in the script.

python3 export_RSCollection_to_AWSS3.py \

--output_file export_mycollection_script.sh \

--param_RS_region usw2a1 \

--param_RS_apikey myRSAPIKey \

--param_RS_wsdotcollectionname prod.mycollection \

--param_RS_outputformat JSON \

--param_AWS_S3bucketuri s3://myS3bucket \

--param_RS_AWSROLE_credentials myIAMroleARN \

--param_RS_AWSEXTID_credentials myExternalID

Command Line Arguments

-output_file(required): Name of the output file that will contain the generated text-param_RS_region(required): The Rockset region your collection exists.-param_RS_apikey(required): Your Rockset API key.-param_RS_wsdotcollectionname(required): The name of your Rockset workspace.dot.collection. E.g. myproductionenv.mycollection—param_RS_outputformat(required): JSON or PARQUET—param_AWS_S3bucketuri(required): Your S3 bucket uri—param_RS_AWSROLE_credentials (optional): Either param_RS_integrationname or param_RS_AWSROLE_credentials must be provided.param_RS_AWSEXTID_credentials (optional): Used in conjunction with param_RS_AWSROLE_credentials, Any external ID that is the AWS IAM Role—param_RS_integrationname (optional): Either param_RS_integrationname or param_RS_AWSROLE_credentials must be provided.—param_AWS_S3outputchunksize(optional): 1000 is the default if not specified—param_RS_querysynchronous(optional): FALSE is the default if you provide nothing; that is to say that an asynchronous query is the default and your script will return you a query_id immediately while the query is still running at Rockset in the background; but if you provide TRUE your query will run synchronously and block until success or failure—param_RS_adv_filtercollection_byID(optional): This is advanced feature and only accepts an integer of 1 or 2. If you have a really large dataset, you might need to filter the collection to export it by _id using one of the 16 hex values starting the GUID. If you select 1, then the output file will contain 16 queries that will run sequentially. If you select 2, then the output file will contain 256 queries (16^2) that will run sequentially. Keep this in mind if you are running against large datasets.

Output

Executing either of the above example options will create a file named with the generated cURL POST command and SQL statement. When you run the script, the collection data will be exported from Rockset and written directly to S3.

The default query mode for this execution is asynchronous. We recommend running this async to ensure that you do not hit any query timeouts. When running async, you will see a response like this:

{"query_id":"a63d329a-964e-45d3-a314-30ca0f43ed6a:kBotPiX:0","status":"QUEUED","stats":{"elapsed_time_ms":9,"throttled_time_micros":9000}}

Once the request has completed, the results in S3 will look like the following:

Amazon S3 → Buckets → myS3bucket → prod.mycollection → a63d329a-964e-45d3-a314-30ca0f43ed6a_kBotPiX_0 → Documents

Note that the a63d329a-964e-45d3-a314-30ca0f43ed6a_kBotPiX_0 value here is a randomly generated query ID from Rockset that identifies your query with the exported results in S3.

Updated 9 months ago