CI/CD Testing

When you develop an application that relies on Rockset, you naturally want to test its queries. Doing so in a fast and repeatable way can be challenging, because Rockset is a SaaS product and every real query goes to a remote service.

Types of testing

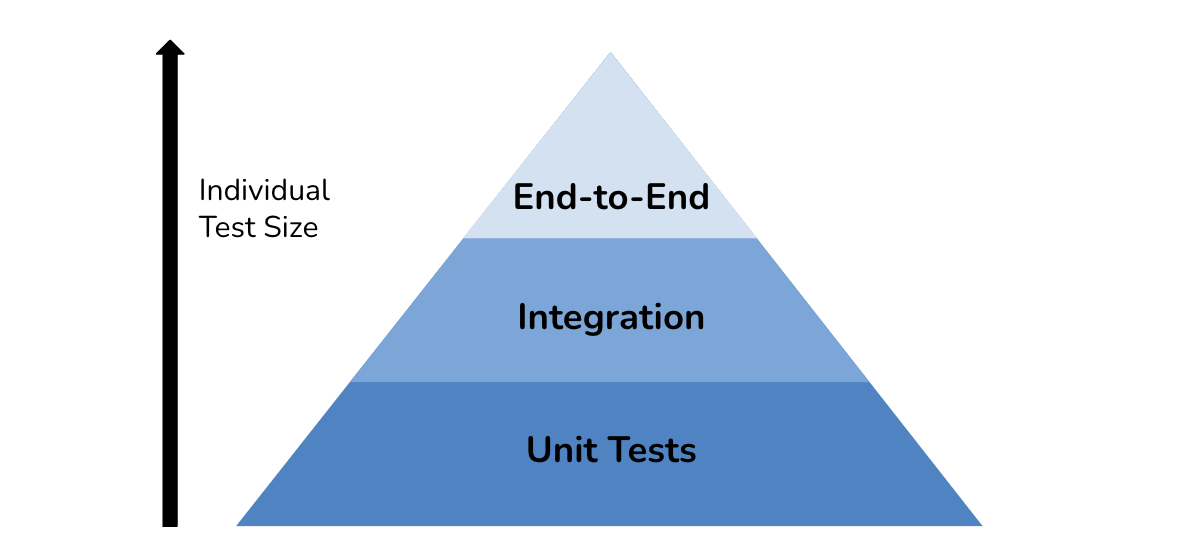

There are many types of tests; this document covers unit, integration, and end-to-end tests.

The testing pyramid is a conceptual model that represents an ideal distribution of different types of software tests across various levels of the testing process. It emphasizes a structured approach to testing, with a focus on having a larger number of lower-level tests (unit tests) and progressively fewer tests as you move up the pyramid. The testing pyramid typically consists of three main layers: unit tests, integration tests, and end-to-end tests.

- Unit Tests:
  - Description: At the base of the pyramid, unit tests are the foundation. They are small, focused tests that validate the functionality of individual units or components of the software in isolation.
  - Characteristics:
    - Fast execution.
    - High granularity (specific to small units of code).
    - Low maintenance overhead.
  - Purpose: Ensure that each unit of code works as expected and identify issues early in the development process.

- Integration Tests:
  - Description: The middle layer of the pyramid focuses on integration tests, which verify the interactions and collaboration between different units or components. Integration tests ensure that the integrated parts work together seamlessly.
  - Characteristics:
    - Test interactions between components.
    - Moderate execution time.
    - Moderate granularity.
  - Purpose: Detect issues related to the integration of components and validate the overall behavior of the system when its parts are combined.

- End-to-End Tests:
  - Description: At the top of the pyramid, end-to-end tests (E2E tests) simulate the entire user journey or workflow through the application. These tests validate the system's functionality from the user's perspective, covering all components and interactions.
  - Characteristics:
    - Slow execution.
    - Low granularity (coarse-grained).
    - Test the application as a whole.
  - Purpose: Ensure that the application works as intended in real-world scenarios, catching issues related to user interactions, integrations, and overall system behavior.

The testing pyramid promotes a balanced testing strategy where the majority of tests are low-level (unit tests), providing rapid feedback during development. As you move up the pyramid, the number of tests decreases, and the tests become more complex, covering broader aspects of the application. This approach aims to catch defects early in the development cycle, reduce testing costs, and improve the overall quality of the software.

It's important to note that the testing pyramid is a guideline, and the exact distribution of tests may vary based on project requirements, technology stack, and other factors. However, the general principle of emphasizing more lower-level tests and fewer higher-level tests remains a valuable concept in software testing.

Unit tests

Unit tests should test individual components (units) of software in isolation. Every other component the unit interacts with is replaced by a mock, stub, or other test double that returns a canned response.

Pros

- Early Detection of Bugs: Unit tests help identify and fix bugs early in the development process, preventing them from becoming more complex and expensive to resolve later.

- Isolation of Issues: By testing individual units (small, isolated parts of code), unit tests make it easier to identify and isolate specific issues within the codebase.

- Documentation: Unit tests serve as living documentation, providing insights into the intended functionality of the code. New developers can refer to the tests to understand how components are expected to behave.

- Regression Testing: Unit tests act as a safety net, ensuring that changes or updates to the codebase do not introduce new bugs or negatively impact existing functionality (regression testing).

- Continuous Integration Support: Unit tests are well-suited for integration into continuous integration (CI) pipelines, allowing for automated testing of code changes as part of the development process.

- Improved Code Design: Writing unit tests often encourages developers to create modular and loosely coupled code, promoting better design practices.

Cons

- Time-Consuming: Writing and maintaining unit tests can be time-consuming, especially for large codebases, and may slow down the development process.

- Incomplete Test Coverage: Achieving comprehensive test coverage can be challenging, as it may be impractical to test every possible code path, leading to potential blind spots in testing.

- Dependency on Implementation Details: Tests may become tightly coupled to the implementation details of the code, making it harder to refactor or change the internal structure without breaking tests.

- False Sense of Security: Relying solely on unit tests can create a false sense of security, as they may not catch all types of errors or issues, such as integration problems or performance issues.

- Maintenance Overhead: Maintaining unit tests requires ongoing effort, especially when the codebase evolves. Failure to keep tests up to date can lead to inaccurate results and decreased effectiveness.

- Not a Substitute for System Testing: Unit tests are only one part of the testing strategy and should not be considered a substitute for comprehensive system testing, including integration and end-to-end testing.

Integration tests

Integration tests focus on the interactions between different components or modules.

Pros

- Identifying Interaction Issues: Integration tests help uncover issues related to the interactions between different components, such as incorrect data passing, communication problems, or compatibility issues.

- Realistic Scenario Testing: Integration tests simulate more realistic scenarios by testing how different parts of the system work together. This can help catch problems that may not be apparent in isolated unit tests.

- Verification of Interfaces: Integration tests verify that the interfaces between components are functioning as expected, ensuring that data is exchanged correctly and adheres to specified protocols.

- Reduced Risk of System Failures: By addressing the integration between components, integration tests reduce the risk of system failures caused by misunderstandings or miscommunications between different parts of the software.

- Comprehensive Coverage: Integration tests contribute to more comprehensive test coverage by examining how different components interact in various scenarios, including error conditions and edge cases.

- Continuous Integration Support: Integration tests can be integrated into continuous integration (CI) pipelines, allowing for automated testing of the entire system as changes are made to the codebase.

Cons

- Complex Setup and Execution: Setting up and executing integration tests can be more complex compared to unit tests. Dependencies between components need to be managed, and test environments must be carefully configured.

- Difficulty in Isolating Issues: When integration tests fail, pinpointing the exact cause of the failure can be challenging, as issues may stem from the interaction of multiple components. Debugging and isolating problems may require more effort.

- Time-Consuming: Integration tests can be time-consuming, especially as the system grows in complexity. This can impact the speed of the development cycle, making it important to balance thorough testing with development efficiency.

- Limited Scope for Isolation: Unlike unit tests, integration tests involve multiple components, making it difficult to isolate and test a single unit in isolation. This limitation may hinder pinpointing the source of a problem.

- Dependency on External Systems: Integration tests may depend on external systems, databases, or services, introducing additional factors that can affect the reliability and consistency of the tests.

- Incomplete Coverage: Achieving complete coverage in integration testing can be challenging. Some interactions may be difficult to simulate, leading to potential blind spots in testing.

End-to-end tests

End-to-end (E2E) tests are designed to simulate the user's interaction with the entire application, covering multiple components, services, and user interfaces. Like any testing approach, E2E tests come with their own set of advantages and disadvantages:

Pros

- Realistic User Scenarios: E2E tests simulate real user scenarios, providing a comprehensive view of how the application behaves in a production-like environment.

- Holistic Validation: E2E tests validate the entire system, including the user interface, backend services, and any external integrations, ensuring that all components work together seamlessly.

- Detection of Integration Issues: E2E tests are effective at detecting integration issues that may arise when different parts of the system interact with each other.

- User Experience Validation: E2E tests help verify that the user experience is consistent and meets the requirements, including navigation, data input, and feedback to the user.

- End-to-End Workflow Testing: E2E tests are particularly useful for testing end-to-end workflows and critical user journeys, ensuring that the entire process functions as intended.

- Comprehensive Coverage: E2E tests provide a high level of test coverage, as they touch multiple layers of the application and involve various components.

- Regression Testing: E2E tests serve as powerful regression tests, helping to catch issues that may arise when new features or changes are introduced to the application.

Cons

- Complexity and Maintenance: E2E tests can be complex to set up and maintain, especially as the application evolves. Changes in the user interface or functionality may require frequent updates to the tests.

- Execution Time: E2E tests typically have longer execution times compared to unit or integration tests. This can slow down the testing and development cycle.

- Limited Isolation: E2E tests operate at a high level, making it challenging to isolate the cause of failures to specific components. Debugging and identifying issues may require additional effort.

- Dependencies on External Factors: E2E tests may be influenced by external factors, such as network conditions, browser versions, or external services, which can introduce variability and affect test consistency.

- Resource Intensive: Running E2E tests may require significant resources, including dedicated testing environments and realistic datasets, which can add to the overall testing cost.

- Not Ideal for All Tests: E2E tests might not be suitable for all testing scenarios, especially those requiring rapid feedback during development. Unit and integration tests are often more appropriate for such cases.

Organization

When organizing your testing with Rockset, you have two primary options. The first is two distinct organizations, one for production and another for development. The second is a single organization that uses Role-Based Access Control (RBAC) to segregate the data. Each approach has its own advantages and disadvantages, as outlined below.

Multiple organizations

Utilizing separate organizations ensures complete segregation, but it may incur additional costs when testing on identical data present in the production organization. This is because the data must be ingested and stored twice, contributing to increased expenses.

One organization

When testing on production data, opting for a single organization and leveraging Role-Based Access Control (RBAC) proves to be a more efficient choice. By creating a role specifically for tests, queries can be executed against the production data without the need to ingest the same data twice, thereby mitigating the cost of storing redundant copies.

To restrict access to sensitive fields, it is recommended to employ a view. This approach not only enhances security but also ensures that only the necessary data is exposed during testing.

To prevent any impact on production traffic, consider utilizing a separate virtual instance or dynamically creating an instance when tests need to run. This approach helps maintain the integrity of production processes while enabling thorough testing on the relevant data.

Testing

With any Software as a Service (SaaS) solution, fully local testing is usually impractical because you cannot run a local copy of the service. Tests that exercise queries therefore have to run real queries against the service. However, there are strategies to work around this limitation and speed up the tests.

Organizing Tests for Enhanced Speed and Efficiency

Efficient test organization is pivotal for optimizing the speed and effectiveness of your testing processes. Well-structured test suites not only facilitate quicker execution but also contribute to better maintainability and scalability. Here are key practices to organize your tests and expedite the testing lifecycle:

- Categorize Tests: Group tests based on their nature, such as unit tests, integration tests, and end-to-end tests. This allows for targeted testing at different levels of the application, ensuring a balance between granularity and coverage.

- Parallel Test Execution: Leverage parallel execution capabilities to run multiple tests simultaneously. This is especially beneficial for large test suites, significantly reducing the overall testing time.

- Dependency Management: Organize tests to manage dependencies efficiently. Ensure that tests requiring the same setup or shared resources are grouped together. This reduces redundancy and enhances the reliability of test outcomes.

- Test Data Management: Streamline test data management by organizing and centralizing datasets. Utilize fixtures or factories to create and share common test data, avoiding unnecessary duplication and improving data consistency.

- Prioritize Critical Tests: Identify critical or high-priority tests and run them first. This approach ensures that crucial functionalities are validated promptly, providing immediate feedback on essential aspects of the application.

- Isolation of Unit Tests: Keep unit tests isolated from external dependencies, such as databases or external APIs. Mock or stub external interactions to prevent unnecessary delays caused by external factors.

- Selective Test Execution: Implement mechanisms for selective test execution. Allow developers and testers to run specific subsets of tests related to the changes they are working on, promoting faster feedback loops during development.

- Continuous Integration (CI) Integration: Integrate your test suites into your continuous integration pipeline. Automated CI/CD processes ensure that tests are executed consistently with every code change, uncovering issues early in the development lifecycle.

- Test Parallelization Frameworks: Explore test parallelization frameworks that enable distributing tests across multiple environments or machines. This approach is particularly effective for speeding up end-to-end tests that involve interactions with the entire application stack.

- Efficient Resource Allocation: Optimize resource allocation for testing environments. Utilize dedicated testing environments with configurations similar to production, ensuring realistic testing scenarios without compromising speed.

By adopting these organizational strategies, you can create a testing framework that not only accelerates test execution but also enhances the overall efficiency and reliability of your testing processes.

Unit tests

When working with a Software as a Service (SaaS) solution and implementing unit tests, it's common to avoid making actual API calls to the SaaS provider during unit testing. Instead, you can use various techniques to isolate your code and decouple it from external dependencies. Here's a general approach:

- Dependency Injection: Inject dependencies into your code rather than directly referencing external services. For example, if your code interacts with a SaaS API, create an interface for the API interactions and inject that interface into your code. During unit tests, you can use a mock or a fake implementation of this interface.

- Mocking Frameworks: Use mocking frameworks to create mock objects that simulate the behavior of external dependencies. This allows you to replace the actual calls to the SaaS API with simulated responses. Popular mocking frameworks include Mockito (for Java), unittest.mock (for Python), and Moq (for .NET).

- Mocking HTTP Requests: For code that interacts with external APIs over HTTP, you can use libraries that allow you to mock HTTP requests. The Request/response recording section below describes one such approach in detail.

- Test Doubles: Implement test doubles such as fakes, stubs, or mocks for external dependencies. A fake implementation may simulate the behavior of the SaaS solution without making actual network calls.

- Fixture Data: Provide fixture data that mimics the expected responses from the SaaS solution. Use this data within your unit tests to simulate different scenarios and responses.

Remember that while unit tests are crucial for testing individual units of code, you should complement them with other types of tests (integration tests, end-to-end tests) to ensure comprehensive coverage and validate the interaction of your components with the actual SaaS solution.

Request/response recording

One way to avoid calling the SaaS solution when running tests is to use a request/response recorder (often called a VCR). A VCR replays a recorded response whenever a request matches a previously recorded one.

VCR testing refers to a specific type of testing related to HTTP interactions, often used in the context of automated testing for applications that make external API calls. The term "VCR" is derived from the idea of recording and replaying HTTP interactions, similar to how a VCR (videocassette recorder) records and plays back video content.

In VCR testing, the goal is to record real HTTP interactions between the application and external APIs during a test run and then store them in a "cassette" (a file or set of files). Subsequent test runs can use these recorded interactions, replaying them from the cassette rather than making live API calls. This approach has several benefits:

- Speed: Testing with recorded interactions is generally faster than making live API calls, as it eliminates the need to connect to external services.

- Consistency: By using recorded interactions, tests are more consistent because they execute with the same data and responses each time, reducing variability in test outcomes.

- Isolation: VCR testing allows tests to focus on the application's behavior without relying on the availability or consistency of external APIs.

- Reduced External Dependencies: Since VCR testing doesn't rely on live API calls, it minimizes dependencies on external services and avoids issues related to rate limits, network instability, or changes in external API behavior.

Here's a basic workflow of VCR testing:

- Recording Phase: During the initial test run, the VCR captures and records the HTTP interactions between the application and external APIs.

- Cassette Storage: The recorded interactions are stored in a "cassette" file, which is typically a text file containing the details of the requests and responses.

- Replaying Phase: In subsequent test runs, instead of making live API calls, the VCR replays the interactions from the cassette file.

- Assertions: Tests include assertions to verify that the application behaves as expected based on the recorded interactions.

- Updating Cassettes: Periodically, the cassette files may need to be updated to reflect changes in the expected behavior of the external APIs or to incorporate new features.

Tools such as VCR for Ruby, Betamax for Java, or cassette libraries in various testing frameworks facilitate VCR testing by providing utilities to record, store, and replay HTTP interactions.

While VCR testing offers advantages, it's essential to use it judiciously. Over-reliance on recorded interactions may result in overlooking potential issues that could arise in a live environment. Therefore, VCR testing is often used in conjunction with other types of tests, such as unit tests and integration tests, to ensure comprehensive test coverage.

Implementations

VCR libraries exist for most languages. The following work with the languages that Rockset provides clients for:

- Go: https://github.com/seborama/govcr

- Python: https://github.com/kevin1024/vcrpy

- Java: https://github.com/EasyPost/easyvcr-java

- Node: https://github.com/philschatz/fetch-vcr

Test fixtures

When running tests, you need a predefined set of data, often referred to as test fixtures, against which expectations can be asserted. For instance, you might expect a query like SELECT COUNT(*) against the fixture collection to return 10.

There are two viable approaches for establishing these fixtures. One option is to employ a shared fixture that is used across all tests. Alternatively, each test can independently add and remove the necessary documents as part of its setup and teardown process.

While the shared setup is straightforward and requires no additional explanation, the per-test setup and teardown approach involves more intricate details. In this method, each test is responsible for its own fixture management. During the setup phase, the test adds the required documents or data needed for its specific scenario. After the test execution, the teardown phase removes any temporary data created during the test, ensuring a clean and isolated environment for each test case.

This per-test setup/teardown strategy offers greater autonomy to individual tests, allowing them to customize their fixture requirements. However, it also demands careful consideration to avoid dependencies or conflicts between tests and to ensure proper cleanup after each test execution.

- Before All: Create a collection.

- Run Tests:
  1. Before Each Test: Add documents to the collection.
  2. Run Test: Execute the test.
  3. After Each Test: Delete the added documents.

- After All: Delete the entire collection.

This sequence ensures that a fresh collection is created before any tests begin. For each individual test, documents are added to the collection before the test is run. After each test, the added documents are removed to maintain a clean state. Finally, after all tests are completed, the entire collection is deleted, leaving no residual data or artifacts from the test suite.

Use the fence API when adding, modifying, and deleting documents. It lets a test wait until the operation has propagated through the system, so the test's queries see the expected data.

Terraform

You can use Terraform to set up permanent test fixtures, such as custom roles, integrations, workspaces, and collections, as well as external resources like AWS S3 buckets and DynamoDB tables.

Automating fixture setup usually pays off, because it makes it possible to validate that the fixtures are correct.

Examples

There are a number of real-world examples of testing strategies in the Rockset GitHub org that you can look at.

Rockset Go client

The Rockset Go client uses both fakes for unit tests and VCR to record full requests and responses from the REST API.

Fakes

In the Rockset Go client, the UntilVirtualInstanceActive() method in the wait package is tested using a fake implementation. The method looks like this:

```go
// UntilVirtualInstanceActive waits until the Virtual Instance is active.
func (w *Waiter) UntilVirtualInstanceActive(ctx context.Context, id string) error {
	return w.rc.RetryWithCheck(ctx, ResourceHasState(ctx, []option.VirtualInstanceState{option.VirtualInstanceActive}, nil,
		func(ctx context.Context) (option.VirtualInstanceState, error) {
			vi, err := w.rc.GetVirtualInstance(ctx, id)
			return option.VirtualInstanceState(vi.GetState()), err
		}))
}
```

This method performs iterative calls to retrieve the state of the virtual instance until it reaches the ACTIVE state.

The corresponding test, shown below, configures the fake to return the INITIALIZING state on the first call and the ACTIVE state on the second. It then asserts that no error occurred and that the GetVirtualInstance() method was called exactly twice.

```go
func TestWait_untilVirtualInstanceActive(t *testing.T) {
	ctx := context.TODO()
	rs := fakeRocksetClient()

	// The first call reports INITIALIZING, the second call reports ACTIVE.
	rs.GetVirtualInstanceReturnsOnCall(0, openapi.VirtualInstance{
		State: stringPtr(option.VirtualInstanceInitializing)}, nil)
	rs.GetVirtualInstanceReturnsOnCall(1, openapi.VirtualInstance{
		State: stringPtr(option.VirtualInstanceActive)}, nil)

	err := wait.New(&rs).UntilVirtualInstanceActive(ctx, "id")
	assert.NoError(t, err)
	// The waiter should stop polling as soon as the instance is ACTIVE.
	assert.Equal(t, 2, rs.GetVirtualInstanceCallCount())
}
```

The fakeRocksetClient() function returns a fake Rockset client that implements the same interface as the real client. It still uses the client's exponential retry, but configures it with a maximum backoff and wait interval of one millisecond, so retries happen almost immediately. Backing off to slow down API calls serves no purpose when the calls go to a fake, and the short interval keeps the unit tests fast.

```go
// return a fake Rockset client with an ExponentialRetry that doesn't back off
func fakeRocksetClient() fake.FakeResourceGetter {
	return fake.FakeResourceGetter{
		RetryWithCheckStub: retry.Exponential{
			MaxBackoff:   time.Millisecond,
			WaitInterval: time.Millisecond,
		}.RetryWithCheck,
	}
}
```

VCR

The virtual instance test suite in the Rockset Go client uses VCR to replay previously recorded API requests and responses. With recorded responses, the whole test suite takes a little over 1 second:

```
$ VCR_MODE=offline go test -v -run TestVirtualInstance
=== RUN TestVirtualInstance
=== RUN TestVirtualInstance/TestExecuteQuery
=== RUN TestVirtualInstance/TestGetCollectionMount
=== RUN TestVirtualInstance/TestGetVirtualInstance
=== RUN TestVirtualInstance/TestGetVirtualInstance/29e4a43c-fff4-4fe6-80e3-1ee57bc22e82
=== RUN TestVirtualInstance/TestGetVirtualInstance/rrn:vi:usw2a1:29e4a43c-fff4-4fe6-80e3-1ee57bc22e82
=== RUN TestVirtualInstance/TestListCollectionMounts
=== RUN TestVirtualInstance/TestListQueries
=== RUN TestVirtualInstance/TestListVirtualInstances
--- PASS: TestVirtualInstance (0.00s)
    --- PASS: TestVirtualInstance/TestExecuteQuery (0.00s)
    --- PASS: TestVirtualInstance/TestGetCollectionMount (0.00s)
    --- PASS: TestVirtualInstance/TestGetVirtualInstance (0.00s)
        --- PASS: TestVirtualInstance/TestGetVirtualInstance/29e4a43c-fff4-4fe6-80e3-1ee57bc22e82 (0.00s)
        --- PASS: TestVirtualInstance/TestGetVirtualInstance/rrn:vi:usw2a1:29e4a43c-fff4-4fe6-80e3-1ee57bc22e82 (0.00s)
    --- PASS: TestVirtualInstance/TestListCollectionMounts (0.00s)
    --- PASS: TestVirtualInstance/TestListQueries (0.00s)
    --- PASS: TestVirtualInstance/TestListVirtualInstances (0.00s)
=== RUN TestVirtualInstanceIntegration
=== RUN TestVirtualInstanceIntegration/TestVirtualInstance_0_Create
    virtual_instances_test.go:137: vi 910f722f-be0c-48ba-a61f-785c6695c3c0 / go_vi is created (17.24275ms)
    virtual_instances_test.go:142: vi 910f722f-be0c-48ba-a61f-785c6695c3c0 is active (49.958µs)
=== RUN TestVirtualInstanceIntegration/TestVirtualInstance_1_Collection
    virtual_instances_test.go:153: workspace go_vi is created (3.774292ms)
    virtual_instances_test.go:158: workspace go_vi is available (44.292µs)
    virtual_instances_test.go:164: collection go_vi.go_vi is created (206.042µs)
    virtual_instances_test.go:169: collection has documents 94.542µs
=== RUN TestVirtualInstanceIntegration/TestVirtualInstance_2_Mount
    virtual_instances_test.go:179: collection go_vi.go_vi is mounted on 910f722f-be0c-48ba-a61f-785c6695c3c0 (3.023417ms)
    virtual_instances_test.go:184: collection go_vi.go_vi is ready (43.333µs)
    virtual_instances_test.go:189: mount is active: 38.25µs
=== RUN TestVirtualInstanceIntegration/TestVirtualInstance_3_Query
    virtual_instances_test.go:200: query ran (127.538792ms)
    virtual_instances_test.go:205: listed queries (75.834µs)
=== RUN TestVirtualInstanceIntegration/TestVirtualInstance_4_Unmount
    virtual_instances_test.go:216: listed mounts (3.31ms)
    virtual_instances_test.go:222: unmounted (22.5µs)
=== RUN TestVirtualInstanceIntegration/TestVirtualInstance_5_Suspend
    virtual_instances_test.go:232: vi is suspending (3.062458ms)
    virtual_instances_test.go:237: vi is suspended (27.708µs)
    virtual_instances_test.go:242: vi is resuming (25.125µs)
    virtual_instances_test.go:247: vi is active (38.5µs)
    virtual_instances_test.go:255: vi updated (48.375µs)
--- PASS: TestVirtualInstanceIntegration (0.16s)
    --- PASS: TestVirtualInstanceIntegration/TestVirtualInstance_0_Create (0.02s)
    --- PASS: TestVirtualInstanceIntegration/TestVirtualInstance_1_Collection (0.00s)
    --- PASS: TestVirtualInstanceIntegration/TestVirtualInstance_2_Mount (0.00s)
    --- PASS: TestVirtualInstanceIntegration/TestVirtualInstance_3_Query (0.13s)
    --- PASS: TestVirtualInstanceIntegration/TestVirtualInstance_4_Unmount (0.00s)
    --- PASS: TestVirtualInstanceIntegration/TestVirtualInstance_5_Suspend (0.00s)
=== RUN TestVirtualInstanceAutoScaling
--- PASS: TestVirtualInstanceAutoScaling (0.00s)
PASS
ok github.com/rockset/rockset-go-client 1.057s
```

Running the same test suite in recording mode, which makes live API calls, takes over 4 minutes.
